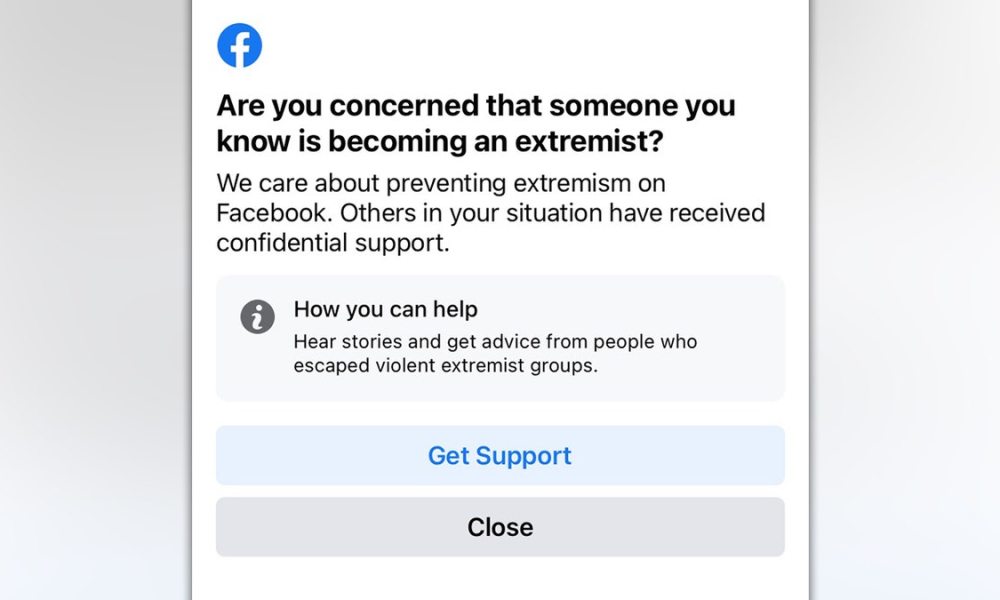

As of July 1, Facebook began testing a feature that asks some users in the U.S. whether they are worried that someone they know is becoming an extremist.

The pop-ups redirect users to a support page. “This test is part of our larger work to assess ways to provide resources and support to people on Facebook who may have engaged with or were exposed to extremist content or may know someone who is at risk,” a Facebook spokeswoman said. She added that the company is working with non-governmental organizations and academic experts on the project [Yahoo News].

Screenshots of the pop-ups were posted on social media. Kira Davis, an editor at RedState, tweeted that a friend of hers had received pop-ups reading “Are you concerned that someone you know is becoming an extremist?” and “You may have been exposed to harmful extremist content recently.”

Clicking the “Get Support” button takes users to the page of Life After Hate, a support group whose mission statement says it “is committed to helping people leave the violent far-right to connect with humanity and lead compassionate lives. Our primary goal is to interrupt violence committed in the name of ideological or religious beliefs.”

Users also reportedly saw a second alert on Facebook: “You may have been exposed to harmful extremist content recently.” It was followed by: “Violent groups try to manipulate your anger and disappointment. You can take action now to protect yourself and others.” The warning comes with a link inviting the user to “Get support from experts,” which explains how to “spot the signs, understand the dangers of extremism and hear from people who escaped violent groups” [BPR].

Mark Zuckerberg, Facebook’s CEO and co-founder, announced in 2019 that the company would not censor political advertisements, regardless of whether the ads contain false information. “What I believe is that in a democracy it’s really important that people can see for themselves what politicians are saying, so they can make their own judgments,” he said. “I don’t think that a private company should be censoring politicians or news.”

Claims that Facebook was censoring conservatives began in 2019. These complaints grew in 2020, particularly ahead of the election, and intensified further when Facebook suspended Donald Trump’s account in January 2021. The company told Reuters that it proactively removes some content and accounts that violate its rules before users see the material, but that other content may be viewed before it is taken down.